double[][] input_sequence = {{0, 0}, {0, 1}, {1, 0}, {1, 1}};

int[] target = {0, 1, 1, 0};

double miu=0.1;

int[] hidden_unit={5};

int epoch=10000;

NN nn = clib.NeuralNetwork(input_sequence, target, miu, hidden_unit);

//To see MSE Gradient Descent

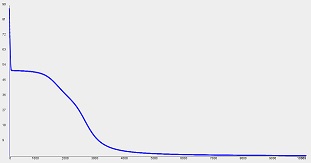

nn.Learning(epoch, true);
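The sketch below gives a rough picture of what a call like `nn.Learning(epoch, true)` does internally. It is a minimal, self-contained Java program for the same setup: a fully connected 2-5-1 network on the XOR data, trained by full-batch gradient descent on squared error with learning rate 0.1 for 10000 epochs.

It is an independent illustration, not `clib`'s implementation. Several details are assumptions and do not come from the library:

- the class name `XorSketch`
- sigmoid activations
- weight initialisation in [-1, 1] with a fixed seed
- the full-batch update rule

The MSE plot triggered by the `true` flag is not reproduced.

```java
import java.util.Random;

// Hypothetical, self-contained sketch (not clib's code): a 2-5-1 sigmoid
// network trained on XOR by full-batch gradient descent on squared error.
public class XorSketch {
    static final double[][] X = {{0, 0}, {0, 1}, {1, 0}, {1, 1}};
    static final double[] T = {0, 1, 1, 0};

    static double sigmoid(double z) { return 1.0 / (1.0 + Math.exp(-z)); }

    final int hidden;
    final double[][] w1; // w1[j][i]: input i -> hidden j; index 2 is the bias
    final double[] w2;   // w2[j]: hidden j -> output; index `hidden` is the bias

    XorSketch(int hidden, long seed) {
        this.hidden = hidden;
        Random rnd = new Random(seed);
        w1 = new double[hidden][3];
        w2 = new double[hidden + 1];
        // Assumed initialisation: uniform in [-1, 1)
        for (double[] row : w1)
            for (int i = 0; i < 3; i++) row[i] = rnd.nextDouble() * 2 - 1;
        for (int j = 0; j <= hidden; j++) w2[j] = rnd.nextDouble() * 2 - 1;
    }

    // Forward pass; fills h with hidden activations and returns the output.
    double forward(double[] x, double[] h) {
        double z = w2[hidden];
        for (int j = 0; j < hidden; j++) {
            h[j] = sigmoid(w1[j][0] * x[0] + w1[j][1] * x[1] + w1[j][2]);
            z += w2[j] * h[j];
        }
        return sigmoid(z);
    }

    // Mean squared error over the four XOR patterns.
    double mse() {
        double[] h = new double[hidden];
        double sum = 0;
        for (int n = 0; n < X.length; n++) {
            double e = forward(X[n], h) - T[n];
            sum += e * e;
        }
        return sum / X.length;
    }

    // One epoch: accumulate gradients of 0.5 * (sum of squared errors)
    // over all patterns, then apply a single update with step size mu.
    void epoch(double mu) {
        double[][] g1 = new double[hidden][3];
        double[] g2 = new double[hidden + 1];
        double[] h = new double[hidden];
        for (int n = 0; n < X.length; n++) {
            double y = forward(X[n], h);
            double delta = (y - T[n]) * y * (1 - y);            // dE/dz at the output
            g2[hidden] += delta;
            for (int j = 0; j < hidden; j++) {
                g2[j] += delta * h[j];
                double dh = delta * w2[j] * h[j] * (1 - h[j]);  // dE/dz at hidden unit j
                g1[j][0] += dh * X[n][0];
                g1[j][1] += dh * X[n][1];
                g1[j][2] += dh;
            }
        }
        for (int j = 0; j <= hidden; j++) w2[j] -= mu * g2[j];
        for (int j = 0; j < hidden; j++)
            for (int i = 0; i < 3; i++) w1[j][i] -= mu * g1[j][i];
    }

    public static void main(String[] args) {
        XorSketch net = new XorSketch(5, 42);
        System.out.printf("Initial MSE: %.4f%n", net.mse());
        for (int e = 0; e < 10000; e++) net.epoch(0.1);
        System.out.printf("Final MSE: %.4f%n", net.mse());
    }
}
```

The sketch uses full-batch updates because, with a small enough step, they keep the error from increasing, which makes the behaviour easy to reason about. Whether a particular seed reaches an MSE as low as the one reported for `clib` is not guaranteed.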

Console output:

```
-------------------------------------------------- 10000 epoch
..................................................
Iteration for learning: 10000 epoch
Final MSE: 0.0037
Accuracy: 100.0%
Error ratio: 0.0%
<Learning process done>
```
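The summary lines can be derived from a network's raw outputs. The library's exact definitions are not shown here, so the sketch below assumes the following:

- **Final MSE** is the mean of the squared differences between outputs and targets.
- **Accuracy** counts an output as correct when thresholding it at 0.5 gives the target.
- **Error ratio** is 100% minus Accuracy.

The class `XorMetrics` and the sample outputs are hypothetical.

```java
// Hypothetical sketch: one plausible way to compute the reported metrics.
// These definitions are assumptions, not clib's documented behaviour.
public class XorMetrics {
    // Mean of squared differences between outputs and targets.
    static double mse(double[] outputs, int[] target) {
        double sum = 0;
        for (int n = 0; n < outputs.length; n++) {
            double e = outputs[n] - target[n];
            sum += e * e;
        }
        return sum / outputs.length;
    }

    // Percentage of outputs that match the target after thresholding at 0.5.
    static double accuracy(double[] outputs, int[] target) {
        int correct = 0;
        for (int n = 0; n < outputs.length; n++) {
            int predicted = outputs[n] >= 0.5 ? 1 : 0;
            if (predicted == target[n]) correct++;
        }
        return 100.0 * correct / outputs.length;
    }

    public static void main(String[] args) {
        int[] target = {0, 1, 1, 0};
        double[] outputs = {0.1, 0.9, 0.8, 0.3}; // hypothetical network outputs
        double acc = accuracy(outputs, target);
        System.out.printf("Final MSE: %.4f%n", mse(outputs, target));  // prints Final MSE: 0.0375
        System.out.printf("Accuracy: %.1f%%%n", acc);                  // prints Accuracy: 100.0%
        System.out.printf("Error ratio: %.1f%%%n", 100.0 - acc);       // prints Error ratio: 0.0%
    }
}
```

Under these assumptions, all four outputs land on the correct side of 0.5, so accuracy is 100% even though the MSE is not zero. The same pattern explains how the run above can report 100.0% accuracy alongside a Final MSE of 0.0037.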